Apple’s Vision Pro mixed reality headset makes good Wi-Fi connectivity more important than ever

07 June 2023 | Research


At its Worldwide Developers Conference (WWDC) 2023 (held 5–9 June 2023), Apple announced its first head-mounted display, the Apple Vision Pro. This mixed reality (MR) device is expensive and may not see substantial sales immediately.

However, its arrival has the potential to act as a ‘catalyst event’, propelling the development of augmented reality (AR), virtual reality (VR) and metaverse applications and expediting mass-market adoption. Over the next 2 years, Apple’s extended reality (XR) proposition will undergo significant evolution, with Wi-Fi connectivity playing a crucial role in shaping the user experience.

The Apple Vision Pro headset will ship in 2024 and is fundamentally different to previous XR headsets

During the event, Apple CEO Tim Cook described the Apple Vision Pro as an AR headset that also blends virtual and real-world environments, which makes ‘XR’ a more appropriate label for it. It will ship in the USA in 2024 at an initial retail price of USD3499, with other markets to follow.

This is significantly more expensive than rival headsets such as Meta’s Quest Pro (USD999) and PICO’s Pico 4 (USD430). As Meta did with the Quest Pro, Apple is initially positioning the Vision Pro as a potential laptop replacement in enterprise settings. Demonstrations of the device at the event focused primarily on projecting 2D applications and virtual screens into physical space.

Apple has a strong track record in developing intuitive user interfaces, and the Vision Pro continues this trend. Unlike rival XR devices, it does not need handheld controllers or other peripherals; instead, it relies on eye and hand tracking, as well as voice control. This control system sets applications developed for visionOS, Apple’s new spatial operating system, apart from those on other platforms: developers must build applications specifically for visionOS, rather than porting existing controller-based applications.

To achieve mass-market take-up of XR and the metaverse, buy-in from three groups is required

Several companies have been trying to develop mass-market XR devices and services for over a decade, with limited success. For example, Meta’s Quest Content Store, which sells applications for its Oculus VR headsets, surpassed USD1.5 billion in cumulative revenue in 2022.

There are a number of barriers to mass-market take-up of XR devices and services and to metaverse-type visions. Cost is a particularly important barrier, but it is part of a wider issue: establishing a self-sustaining business case for mass-market take-up.

To scale, Apple and Meta will both eventually need all three of the following groups to buy into their visions.

- Connectivity providers. A Vision Pro can be used without internet connectivity for on-device games, content and experiences, but its primary use case is connected applications. XR devices rely heavily on high-speed, low-latency, reliable connectivity, especially for real-time interactions in XR and, in future, for metaverse applications. Connectivity to the device (both the on-premises connectivity and the broadband access connection) will become crucial when graphics rendering moves to the cloud. This is less of a concern for the first-generation Vision Pro, which renders graphics on the device, but will be important in future. Even so, good Wi-Fi connectivity is still a potential point of failure for the first-generation Vision Pro.

- Developers and content creators. Building an ecosystem of developers and creative individuals to create compelling content and applications is essential. Without engaging content, end users will not be motivated to purchase and use XR devices and services.

- End users. End users, including consumers and enterprises, are the primary revenue source for XR experiences and services. They need to see increased utility in terms of productivity or entertainment to justify investing in XR.

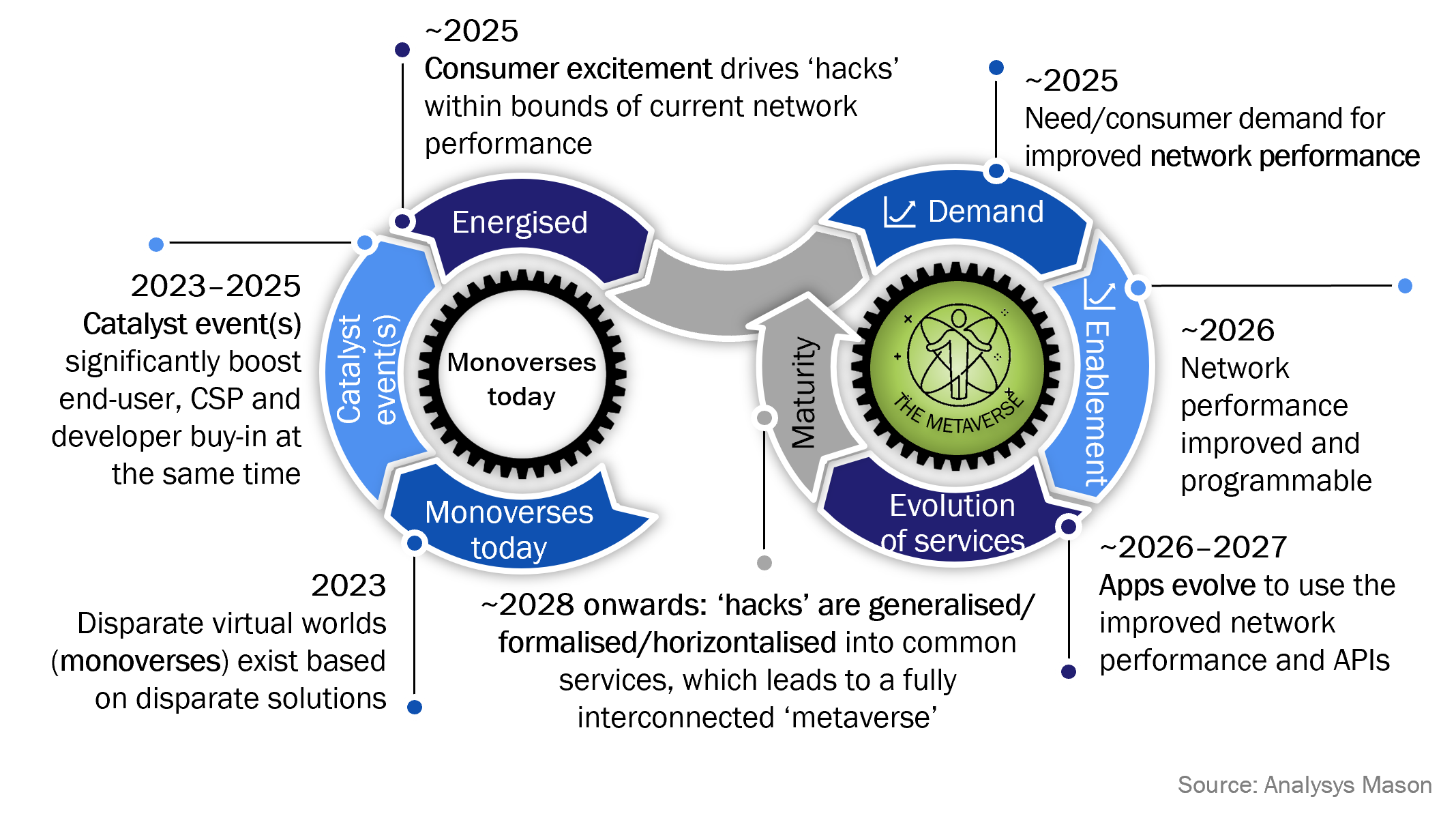

Meta’s metaverse vision faced obstacles due to a lack of sustained buy-in from these three groups. Telecoms operators had mixed responses, while developers showed interest and the gaming sector aligned well with MR development and the metaverse. However, end-user adoption remained insufficient. This does not mean the end of the metaverse vision, merely that buy-in had not reached the critical mass needed to make the business case self-sustaining. Figure 1 illustrates this: it shows how catalyst events could lead disparate XR platforms (‘monoverses’) to connect up eventually and form a single, unified metaverse.

Figure 1: Indicative timeline for the evolution of metaverse and AR/VR applications

‘Catalyst events’ are events that boost the buy-in of one or more of the above groups. Meta’s pivot towards metaverse services strengthened buy-in from the first two groups but failed to capture end users. Apple, in contrast, excels at generating end-user interest, even if mass-market buy-in may only come with later generations of the Vision Pro. Furthermore, Apple depends less on telecoms operators’ buy-in than Meta does: Apple is aiming not for a unified, standards-based metaverse, but for a walled-garden XR ecosystem that it controls.

Apple XR will evolve significantly over the next 24 months and Wi-Fi will be a key ‘pain point’ for delivering high-quality experiences

The initial release of the Apple Vision Pro in 2024 will not revolutionise the XR market on its own. However, what happens subsequently might: the device will stimulate developer involvement and content creation for the platform, and Apple’s partnership with the games engine Unity is a significant step towards this. As a result, within the next 2 years the device’s usage will shift from being primarily AR-based (projecting 2D screens into 3D space) to a more immersive experience with greater use of 3D and MR elements. Apple’s brand reputation and ecosystem-building capabilities, combined with its initial positioning of the device (not solely as a 3D gaming device), will likely sustain consumer interest.

The Apple Vision Pro will primarily be used in static locations, so it is most likely to be connected via Wi-Fi to a fixed broadband connection rather than via 5G (there is, as yet, no information on any connectivity option for the device beyond Wi-Fi). Rendering graphics locally on the device limits its impact on network traffic to some extent, and this aligns with Apple’s privacy-focused approach: third-party applications have no access to eye-tracking data or direct environmental information. These factors hinder cloud-based rendering in this first generation of the device.

However, as rendering moves to edge and cloud locations in future, network traffic from XR devices will grow rapidly. In addition, even with local rendering, the Vision Pro will still affect network traffic by increasing demand for video content at 4K-plus resolution per eye. The device’s ability to record ‘3D spatial videos’ will also prove popular and will inevitably generate large amounts of traffic. Furthermore, as more social and gaming applications are released, demand for lower-latency connectivity technologies will increase.

Here, we come to the weak point in people’s overall experience of the Apple Vision Pro, and one that is in operators’ control: it is not the broadband connection into people’s premises that will limit how XR is used, but the way that broadband is distributed within the home. Consumers will demand better Wi-Fi connectivity for these devices, and operators must ensure that they offer reliable, fast, low-latency connectivity throughout the home.


Author: Martin Scott, Research Director
